An active ensemble classifier for detecting animal sequences from global camera trap data

Abstract

Camera traps can generate huge numbers of images, so reliable methods for processing them automatically are in high demand, in particular methods that find the images or image sequences that actually include animals. Automatically filtering out images that are empty or contain humans can be challenging, as images can be taken in different landscapes, habitats and lighting conditions. Weather and seasonal conditions can vary greatly. Most of the images can be empty, because cameras using passive infrared (PIR) sensors trigger easily due to moving vegetation or rapidly varying shadows and sunny spots. Animals in images are often hidden behind vegetation, and camera traps will see them from previously unseen angles. Therefore, conventional deep-learning methods for detecting animals in images need huge training sets to achieve good accuracy.

We present a novel background removal approach based on movement-masked images computed from sequences of images. Our deep vision classifier uses these movement images for classification instead of the original images. Additionally, we apply deep active learning (active learning for deep models) to collect training samples, which reduces the number of annotations required from the user.
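To make the idea of a movement-masked image concrete, here is a minimal sketch assuming greyscale frames from one sequence, a per-pixel median over the sequence as the static background, and a fixed grey-level threshold. The function names and the threshold of 25 are hypothetical, and the paper's own masking pipeline may differ. The B&W mask marks moving pixels; the colour mask keeps original pixel values only where movement was detected.

```python
import numpy as np

def movement_masks(frames: np.ndarray, threshold: float = 25.0) -> np.ndarray:
    """Return one boolean (B&W) movement mask per frame.

    frames: shape (n_frames, height, width), greyscale, same camera view.
    Assumption: the per-pixel median over the sequence is the static background.
    """
    frames = frames.astype(np.float32)
    background = np.median(frames, axis=0)   # estimate of the static scene
    diff = np.abs(frames - background)       # per-pixel deviation from background
    return diff > threshold                  # True where something moved

def colour_mask(frame_rgb: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep original colours where the mask is True, black elsewhere."""
    return np.where(mask[..., None], frame_rgb, 0)
```

Because a moving animal occupies any given pixel in only a few frames of the sequence, the median falls back to the background value there, and the animal stands out in the mask regardless of habitat.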

Our method performed well in singling out image sequences that actually include animals, thus filtering out the majority of images that were empty or contained humans. Most importantly, the method also performed well for backgrounds and animal species not seen in the training data. Active learning achieved good separation between classes even with small training sets, without the need for laborious large-scale pre-annotation.

We present a reliable and efficient method for filtering out empty image sequences and sequences containing humans. This greatly facilitates camera trapping research by enabling researchers to restrict the task of animal classification to only those image sequences that actually contain animals.


Mononen, T., Hardwick, B., Alcobia, S., Barrett, A., Blagoev, G. A., Boyer, S., ... & Ovaskainen, O. (2025). An active ensemble classifier for detecting animal sequences from global camera trap data. Methods in Ecology and Evolution.

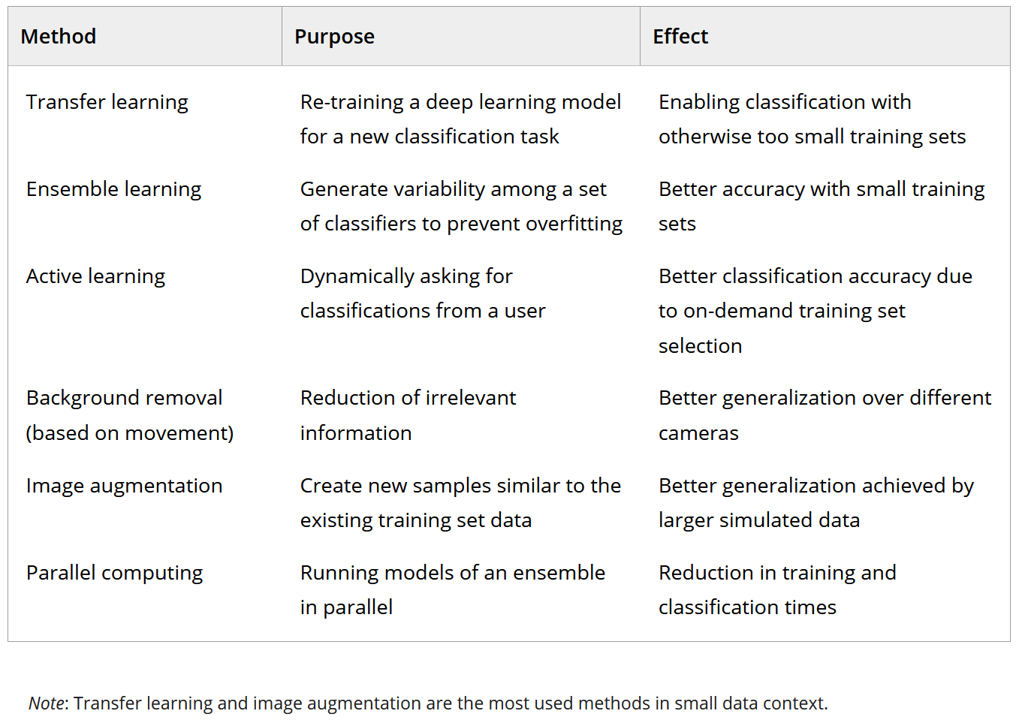

TABLE 1. The main methods used in our classifier to cope with very limited training data in the absence of pre-annotated training data.

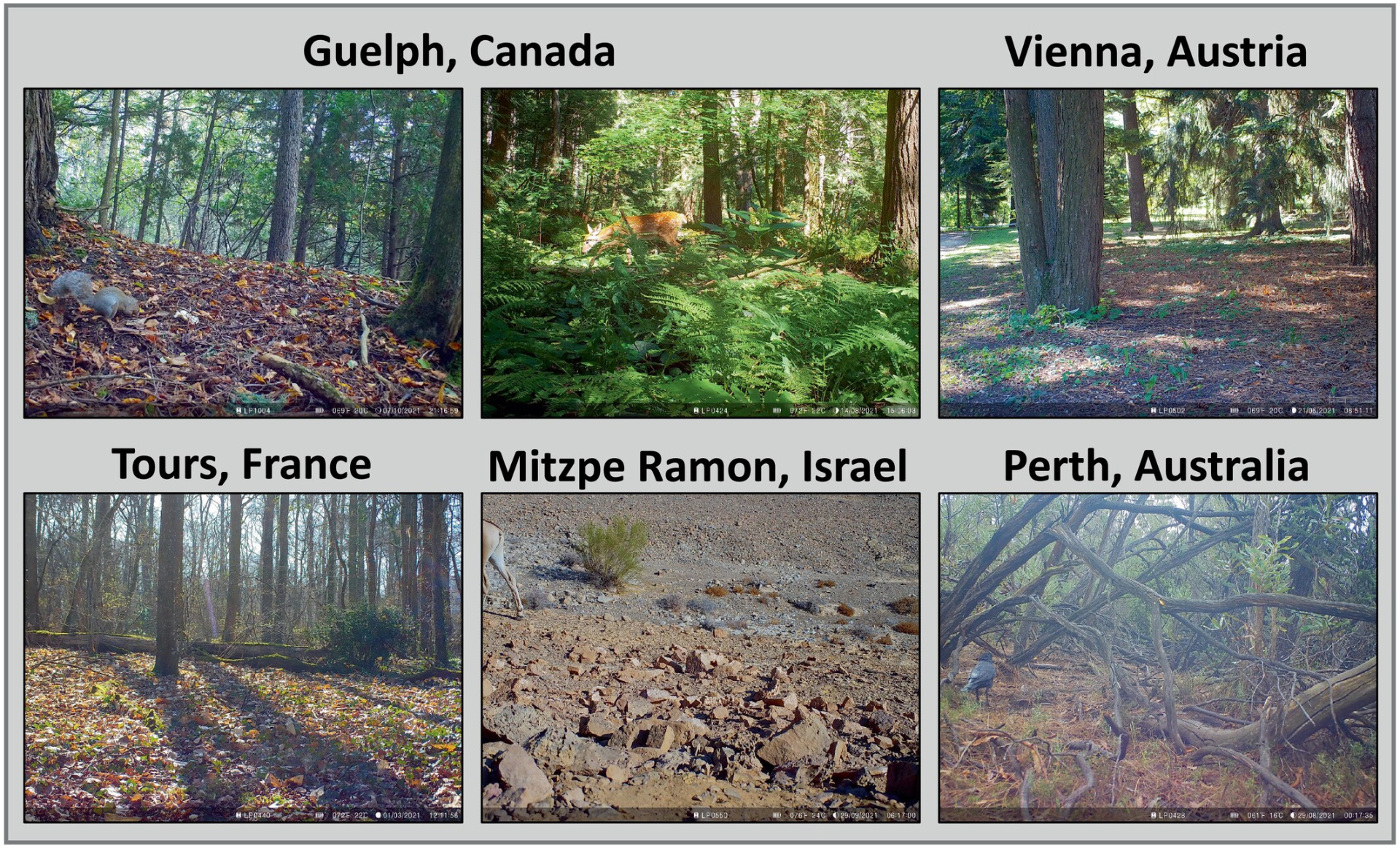

Figure 1. Camera views showing the different habitat types used in training and tests. The thickness of vegetation varies within sites and between seasons. In the top row, the leftmost and rightmost images show the two cameras (G1 and V1) used in the initial training of two filters.

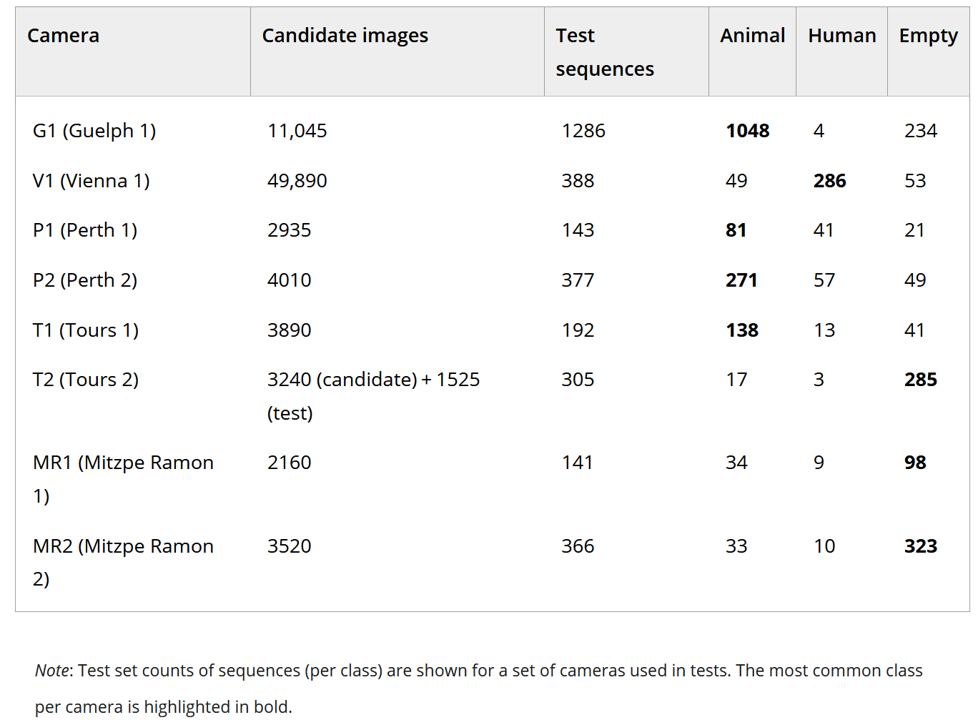

TABLE 2. Candidate image and test sequence counts.

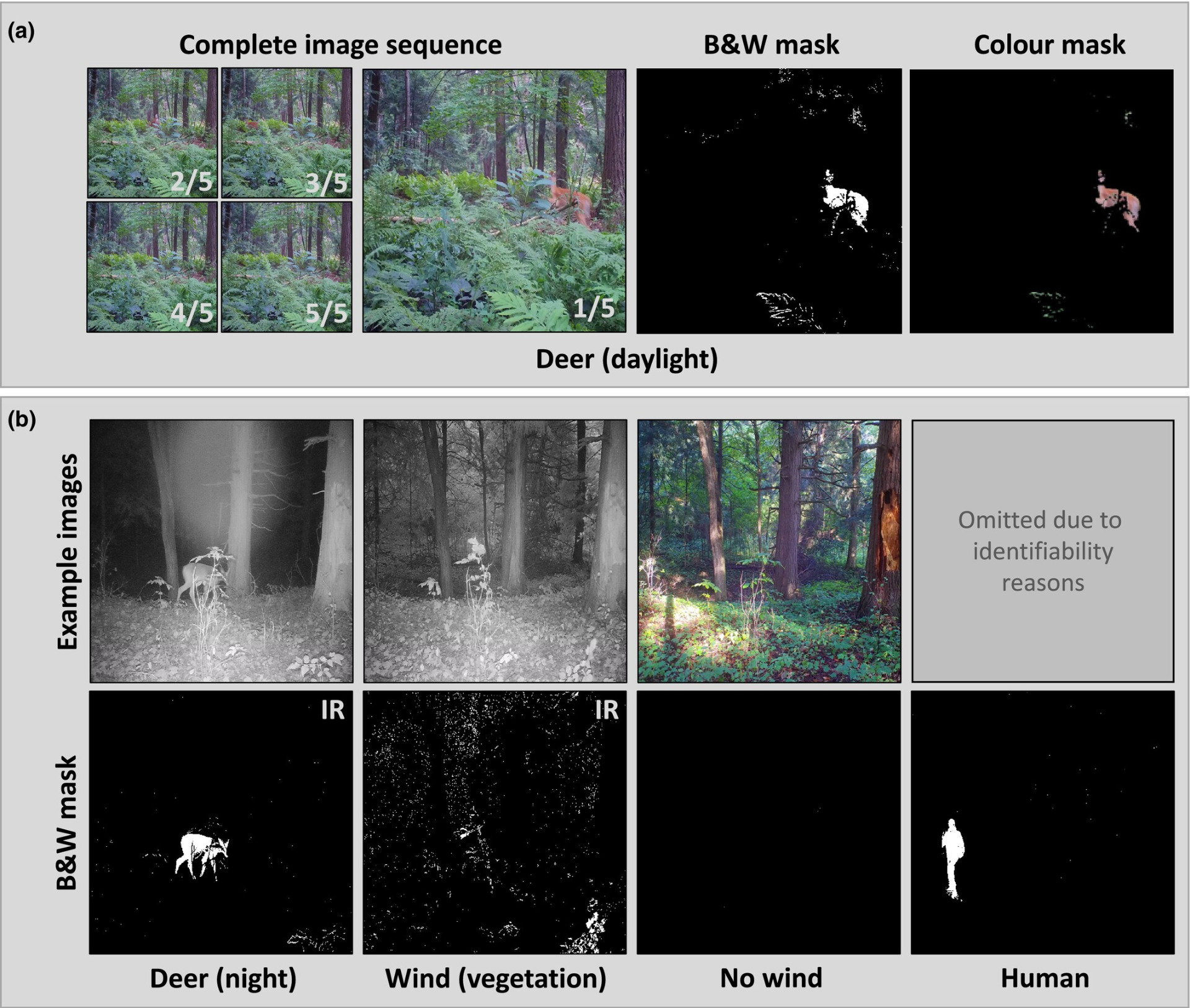

Figure 2. (a) An example image sequence with the corresponding B&W and colour masks for the first image in the sequence, showing only a moving deer. (b) Examples of other movement-filtered images. Each column shows a scaled original image and the corresponding B&W mask. Each example is a single image selected from a complete sequence of five images. Animals behind vegetation stand out in the masks and look similar across locations and habitats.

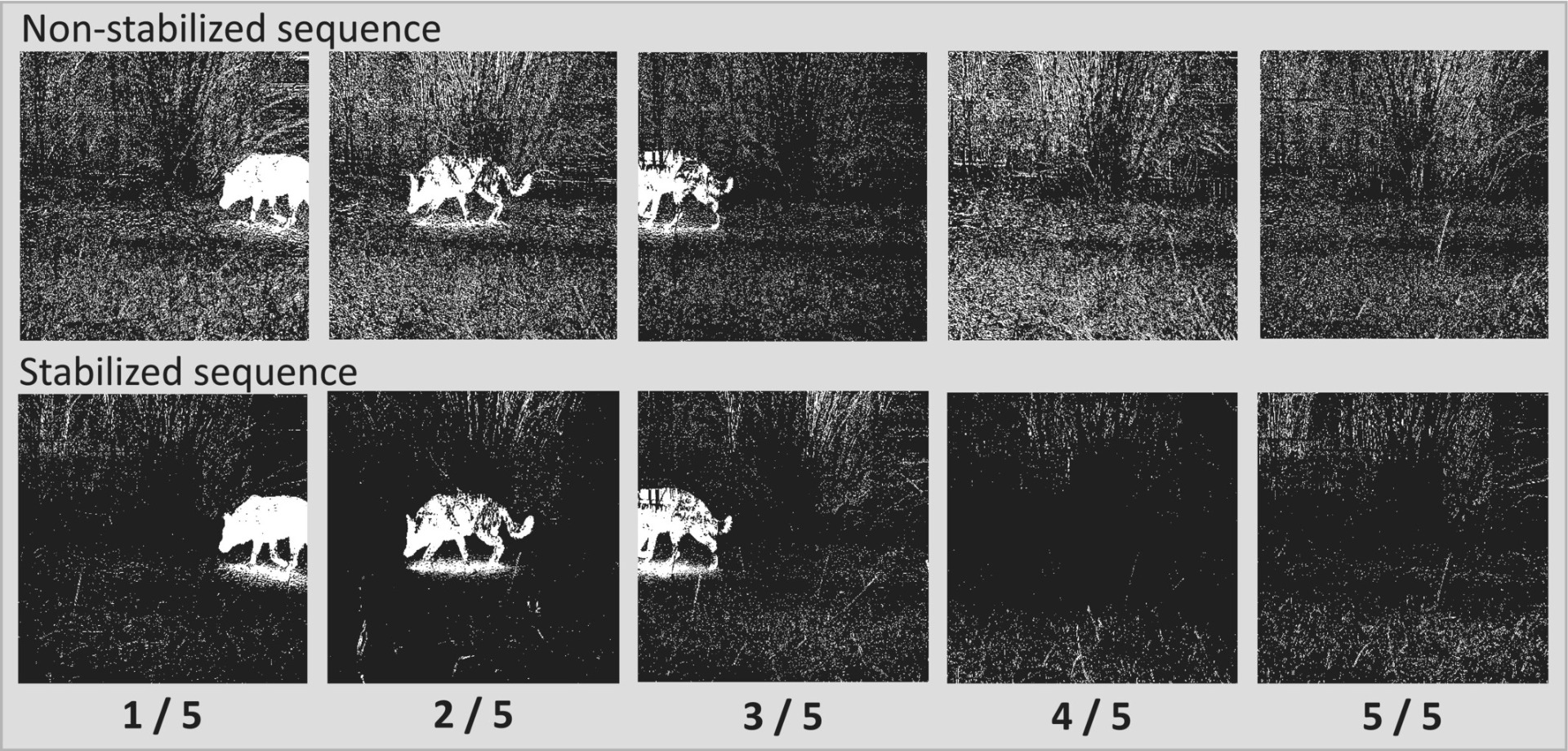

Figure 3. An example of a stabilized camera trap sequence during a gust of wind. In the bottom row images, camera movement has been eliminated with algorithmic post-processing. A dog becomes more visible and there are fewer undesired background pixels. Naturally, the movement of vegetation does not disappear completely.
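The caption does not say which stabilization algorithm was used. As one plausible illustration, the sketch below swaps in phase correlation, a standard technique for estimating a global translation between two frames, and aligns every frame to the first one. The function names are hypothetical. The sketch handles integer translations only and uses `np.roll`, which wraps pixels around the border; real camera shake may also involve rotation or sub-pixel motion.

```python
import numpy as np

def estimate_shift(reference: np.ndarray, moved: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (dy, dx) translation of `moved` relative to
    `reference` using phase correlation."""
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    cross = f_mov * np.conj(f_ref)
    cross /= np.abs(cross) + 1e-12            # keep phase information only
    corr = np.abs(np.fft.ifft2(cross))        # sharp peak at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peak indices wrap around; convert them to signed shifts.
    shifts = [int(p) - n if p > n // 2 else int(p) for p, n in zip(peak, corr.shape)]
    return shifts[0], shifts[1]

def stabilize(frames: np.ndarray) -> np.ndarray:
    """Align every frame of a sequence to the first frame."""
    ref = frames[0]
    aligned = [ref]
    for frame in frames[1:]:
        dy, dx = estimate_shift(ref, frame)
        aligned.append(np.roll(frame, (-dy, -dx), axis=(0, 1)))  # undo the shift
    return np.stack(aligned)
```

Stabilizing before computing movement masks means that pixels changing only because the whole camera moved are no longer counted as movement.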

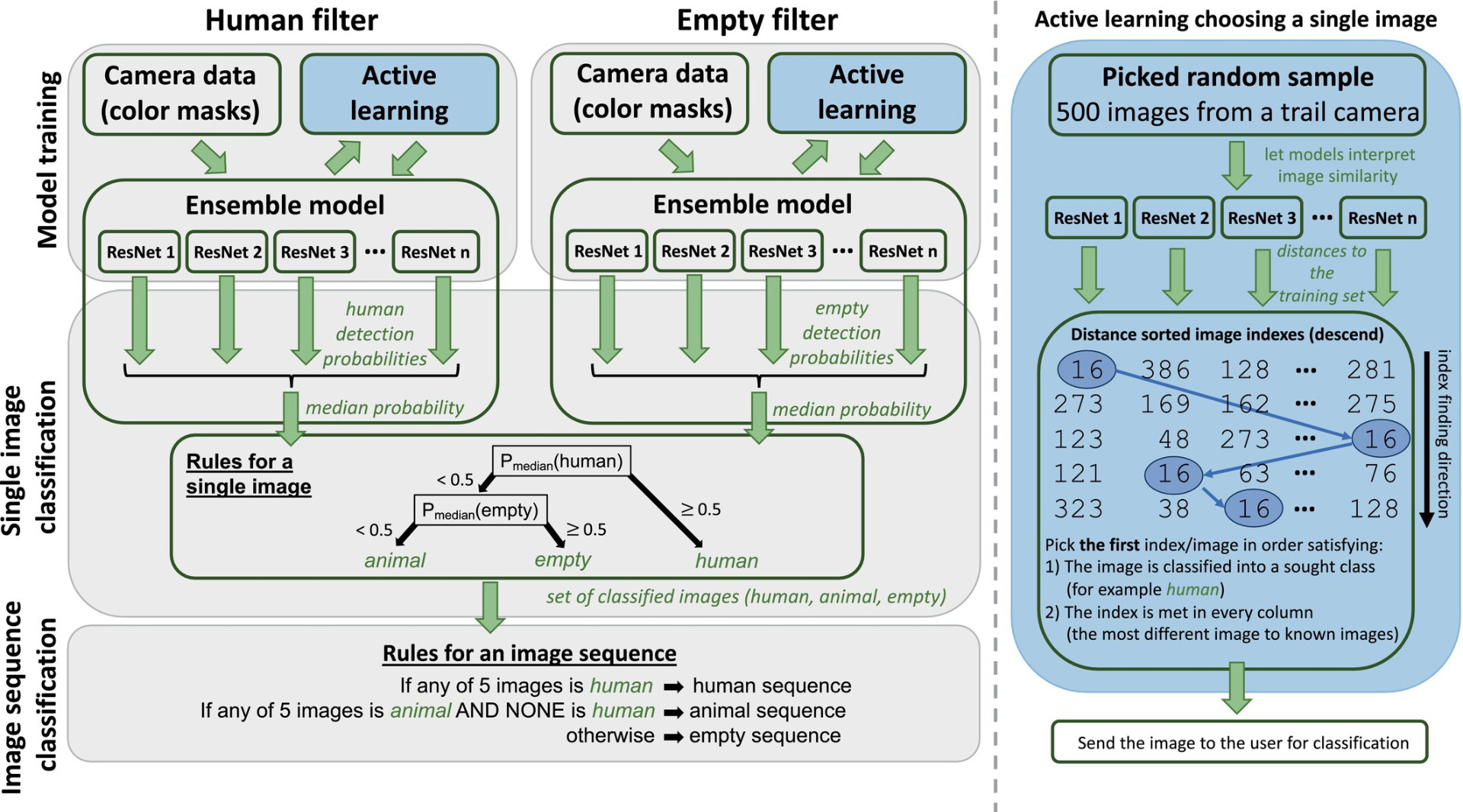

Figure 4. A basic diagram of the classifier structure, showing the major components of our deep active learning classifier and how it classifies single images and image sequences. On the right, a simplified procedure diagram shows how the active ensemble learning module chooses a new image for user annotation. A distance-sorted image index table is searched row by row to find images to propose. Only image indexes are shown, not the distances. Each column, corresponding to the outcome of a single ResNet, is sorted independently.
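The table search described in the caption can be sketched as follows. This is a minimal sketch under one assumption the caption does not state: the "distance" of an image for a given ensemble member is taken to be |p − 0.5|, the distance of its predicted probability from the decision boundary, so the most uncertain images come first. Each column (one model) is sorted independently, and rows are scanned top to bottom, skipping images that are already labelled or already proposed. The function name `propose_images` is hypothetical.

```python
import numpy as np

def propose_images(scores: np.ndarray, labelled: set[int], k: int) -> list[int]:
    """Propose up to k unlabelled images for user annotation.

    scores: shape (n_images, n_models), one column of predicted probabilities
    per ensemble member (e.g. one ResNet each).
    Assumption: distance = |p - 0.5|, i.e. closeness to the decision boundary.
    """
    distances = np.abs(scores - 0.5)
    # table[r, m] = index of the image with the r-th smallest distance for model m;
    # every column is sorted independently.
    table = np.argsort(distances, axis=0, kind="stable")
    chosen: list[int] = []
    for row in table:                  # search the table row by row
        for idx in row:                # one candidate per ensemble member
            idx = int(idx)
            if idx not in labelled and idx not in chosen:
                chosen.append(idx)
                if len(chosen) == k:
                    return chosen
    return chosen
```

Scanning row by row lets each ensemble member contribute its most uncertain images in turn, so the proposals are not dominated by a single model's view of the data.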

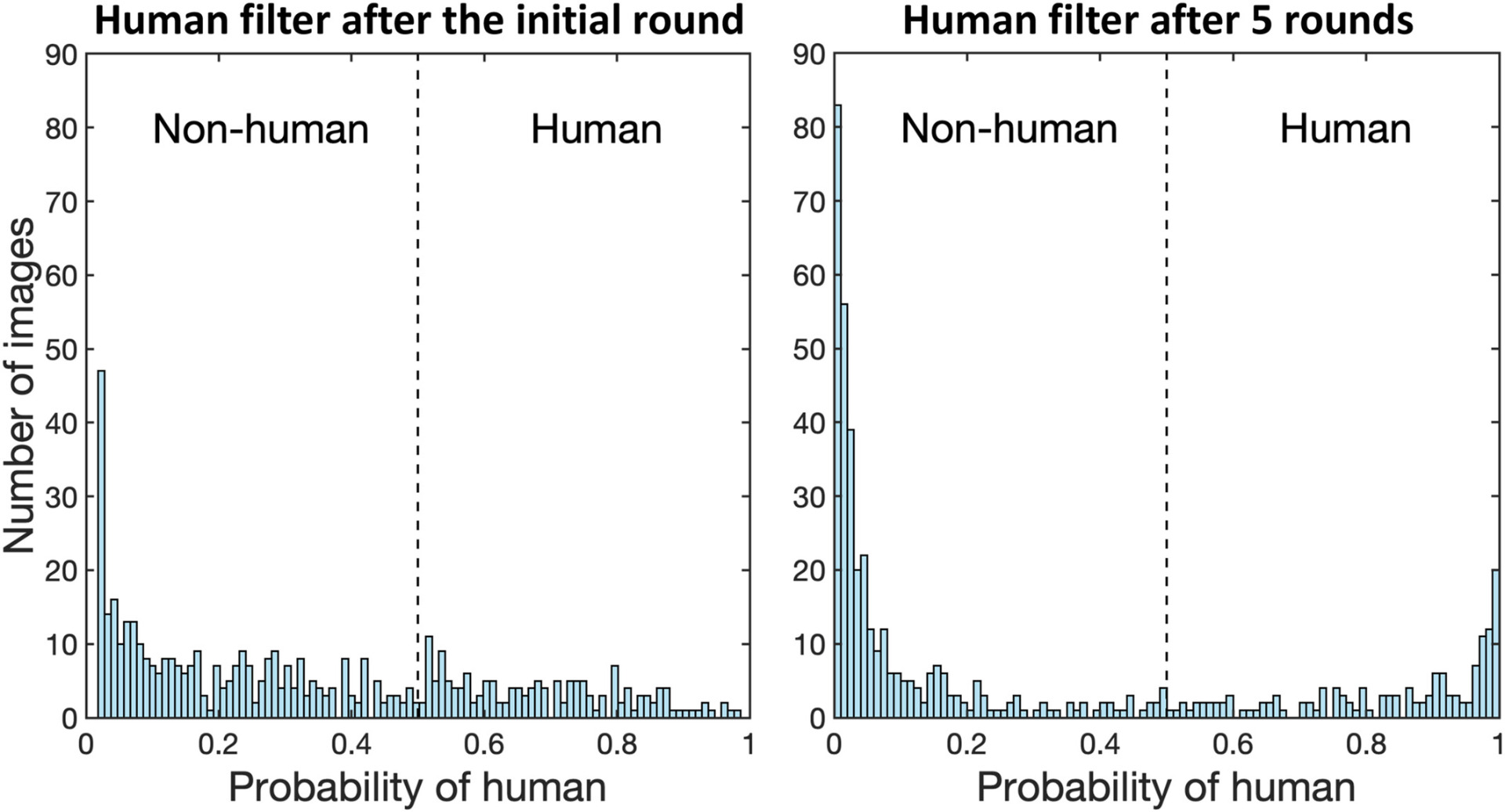

Figure 5. A user can follow the convergence of a filter during active learning from the score histograms of 500 randomly sampled images. Left panel: after the initial 2 × 20 user-selected images (the first round), the filter is fairly unsure about classification, as reflected by median probabilities far from 0 and 1. Right panel: after five rounds (upsampling; 2 × 192 images), the filter becomes more confident about its classifications, as indicated by reduced uncertainty. The sampled image sets differ between the two panels.
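A convergence check of this kind can be sketched as follows, assuming `scores` holds one filter's predicted probabilities for all candidate images. The sketch draws a random sample of 500, builds a histogram over [0, 1], and summarizes confidence as the median distance of each score from its nearest extreme (0 or 1). That summary statistic and the function name are hypothetical choices for illustration. A value near 0.5 means the filter is unsure, and a value near 0 means it is confident.

```python
import numpy as np

def score_summary(scores, n_sample: int = 500, bins: int = 10, seed: int = 0):
    """Histogram and median uncertainty of filter scores for a random sample.

    Assumption: `scores` are one filter's probabilities over candidate images.
    """
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    sample = rng.choice(scores, size=min(n_sample, len(scores)), replace=False)
    counts, edges = np.histogram(sample, bins=bins, range=(0.0, 1.0))
    # Distance of each score from the nearest of 0 and 1: small means confident.
    uncertainty = np.minimum(sample, 1.0 - sample)
    return counts, edges, float(np.median(uncertainty))
```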

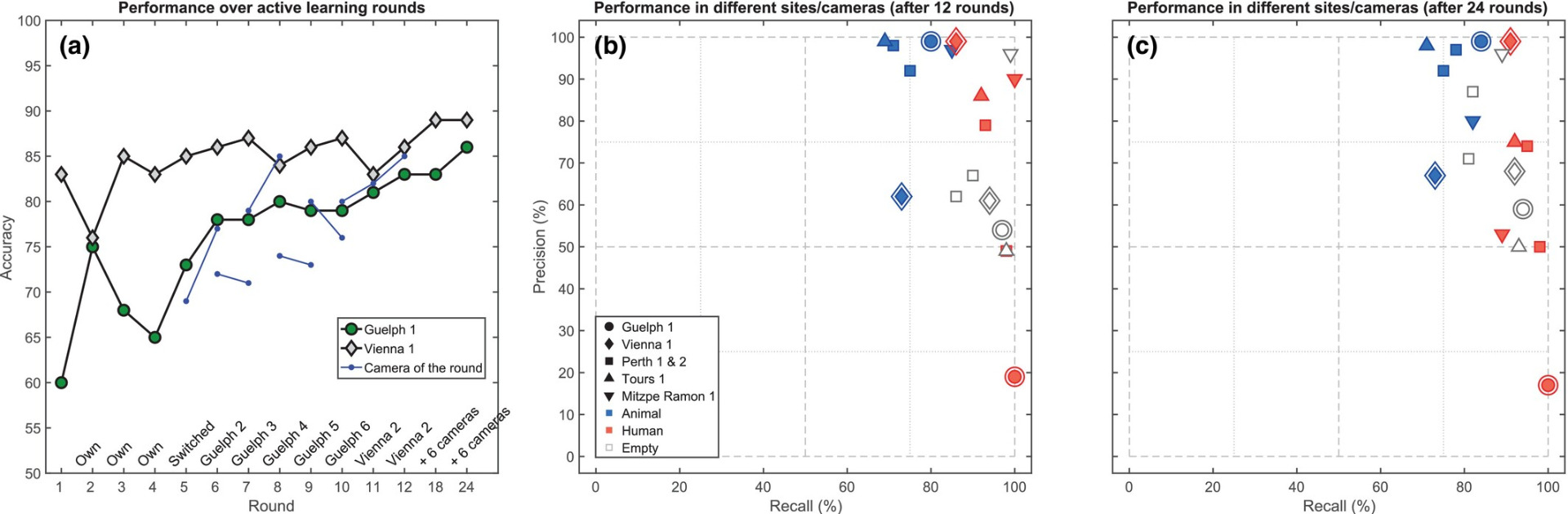

Figure 6. (a) Accuracy in sequence classification improves during the active learning process with two initial training cameras. Blue line segments show the accuracy improvement from each new camera added to training, measured right before and right after a training round. The corresponding camera names are marked on the x-axis. Accuracy is still improving between rounds 12 and 24. (b) Class-wise precision and recall for different cameras from various sites after 12 rounds. Double-framed markers correspond to the cameras G1 and V1 of panel (a), and the legend lists marker shapes (sites) and colour codes (classes). (c) After the training data are doubled, the situation stays almost the same, except that the precision of the human class has decreased.

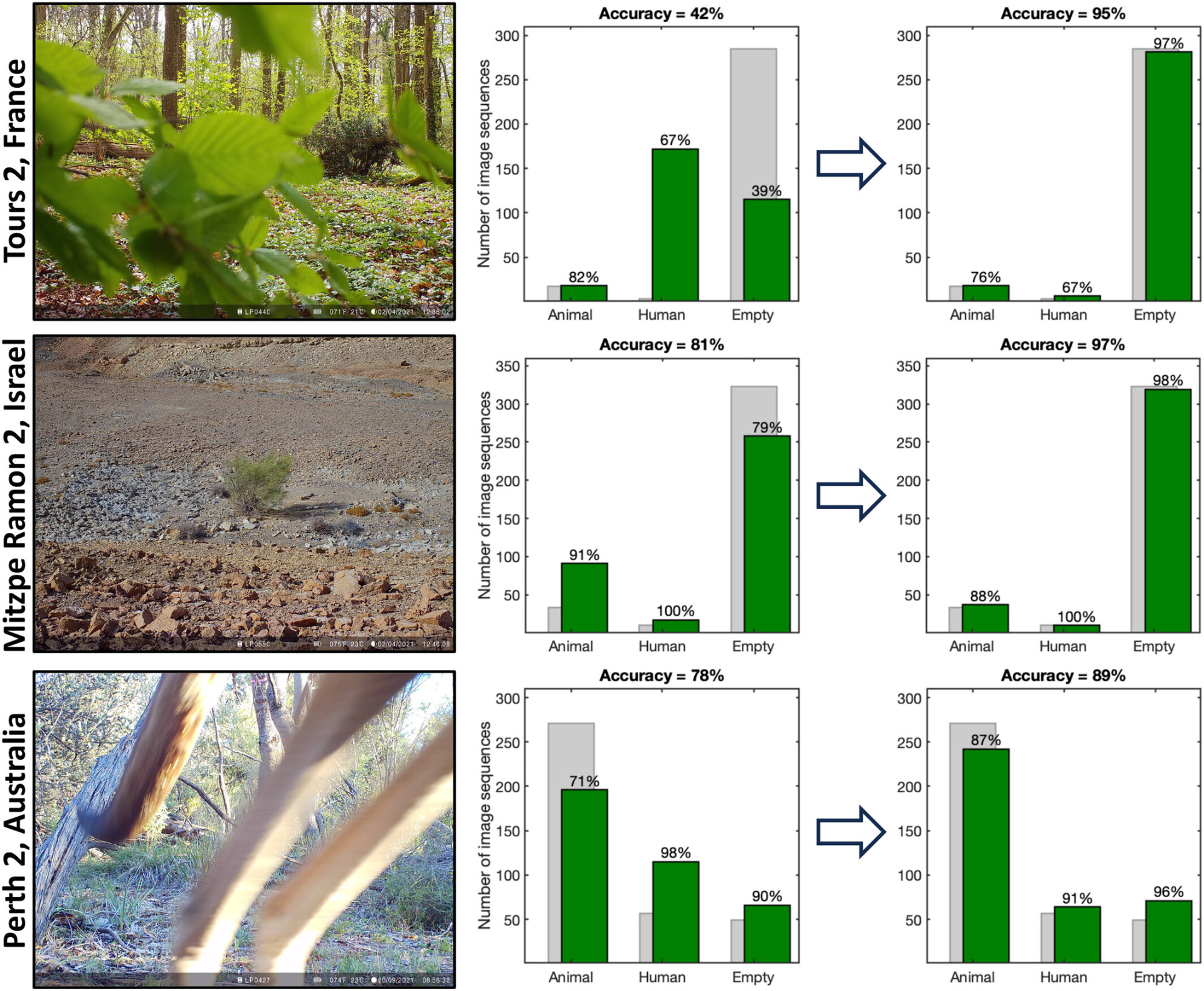

Figure 7. Sequence classification performance improves after additional training rounds on cameras with initially lower accuracy (T2, MR2 and P2). Example images from these cameras are shown in the leftmost column. Grey bars show the true number of sequences in each class and green bars show the classification results. In Tours, the movement of tree leaves is interpreted as possible human presence (compare the bars in the top middle panel). In Mitzpe Ramon, the movement of a bush is incorrectly interpreted as animal movement. In Perth, the 12-round classifier interprets some kangaroo images as humans. After the additional training rounds, performance increases considerably in each case (rightmost column). The percentages shown are the recall of each class, that is, the proportion of sequences of that class that were correctly found.
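The class-wise precision and recall reported in Figures 6 and 7 follow the standard definitions. For a class c, precision is the fraction of sequences predicted as c that truly are c, and recall is the fraction of true c sequences that were predicted as c. The sketch below computes both from lists of true and predicted sequence labels. The function name and the example labels are illustrative only.

```python
import numpy as np

def class_precision_recall(y_true, y_pred, classes):
    """Return {class: (precision, recall)}; NaN where a denominator is zero."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    result = {}
    for c in classes:
        tp = np.sum((y_pred == c) & (y_true == c))   # correctly predicted as c
        predicted = np.sum(y_pred == c)              # all predictions of c
        actual = np.sum(y_true == c)                 # all true members of c
        precision = tp / predicted if predicted else float("nan")
        recall = tp / actual if actual else float("nan")
        result[c] = (float(precision), float(recall))
    return result
```

For example, mislabelling one of three animal sequences as human lowers animal recall to 2/3 and, at the same time, lowers human precision. This coupling is why a drop in human-class precision, as in Figure 6(c), can reflect animals being misclassified as humans.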

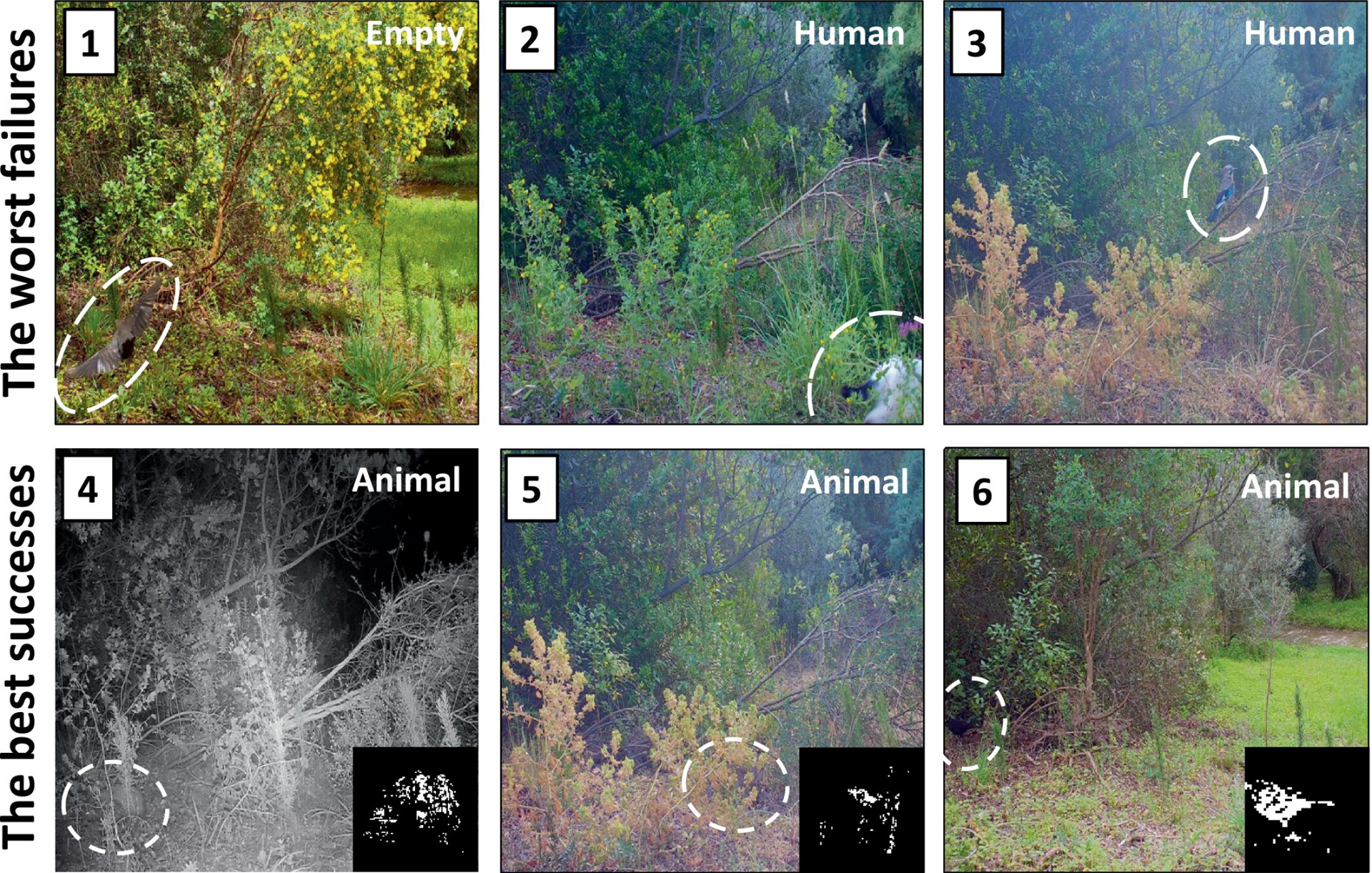

Figure 8. Examples of Lisbon camera classifications. Class predictions are shown in the top right corner of each image. The top row (images 1–3) shows the worst cases, where the classifier failed to recognize highly visible animals. The bottom row (images 4–6) shows some of the most cryptic examples that our classifier recognized correctly. Zoomed B&W masks in the bottom right corner of each image show the movement pixels. When the human probability threshold is raised to 80%, images 2 and 3 are classified correctly.
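The caption does not spell out how a raised human threshold is applied. One plausible reading is sketched below, under the assumption that a sequence is labelled "human" only if the human probability reaches the threshold. Otherwise the most probable remaining class is used. The class order and the function name are hypothetical.

```python
import numpy as np

CLASSES = ("animal", "human", "empty")  # hypothetical class order

def classify(probs, human_threshold: float = 0.8) -> str:
    """Pick a class label, requiring at least `human_threshold` for 'human'.

    probs: class probabilities in CLASSES order.
    Assumption: if 'human' ranks first but is below the threshold,
    the next most probable class is returned instead.
    """
    probs = np.asarray(probs, dtype=float)
    ranked = [CLASSES[i] for i in np.argsort(probs)[::-1]]  # most probable first
    best = ranked[0]
    if best == "human" and probs[CLASSES.index("human")] < human_threshold:
        return ranked[1]
    return best
```

Under this rule, an animal sequence that the model scores as 60% human and 30% animal is labelled "animal" at an 80% threshold, which would correct errors like those in images 2 and 3.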